[Editorial] Harnessing the AI Era with Breakthrough Memory Solutions

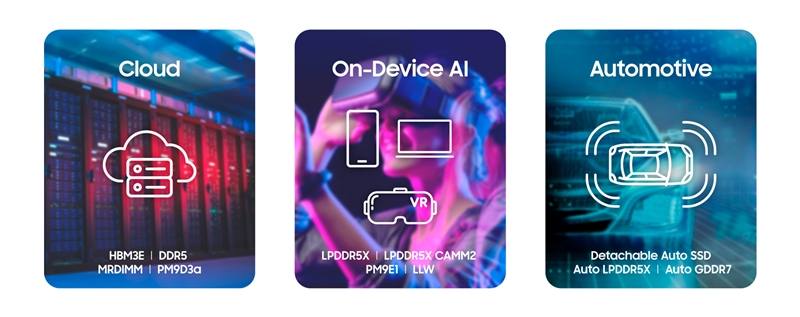

Capabilities of AI are approaching those of human intellect as AI-related hardware and software proliferate. To respond to the Hyperscale AI market, Samsung is bringing a variety of memory portfolios based on application-specific requirements to market, such as DDR5[1], HBM[2] and CMM[3]. All of these solutions will power the wide range of upcoming AI technologies. And at CES® 2024, Samsung will showcase a number of cutting-edge memory solutions for the AI era and compelling technological capabilities that demonstrate its role as an industry leader.


Redefining Boundaries for AI Technologies

The ways in which AI is fundamentally transforming our lives are already apparent. At work, it is maximizing productivity in documentation and data analysis. At home, it is making our lives easier and more enjoyable through an increasing number of convenient services. It would not be an overstatement to say that generative AI services like ChatGPT have ushered a new paradigm into our lives.

Although AI first started in the cloud, it is rapidly expanding to other applications and platforms. Implementing on-device AI on smartphones, computers and automobiles is necessary for a number of reasons, including security and responsiveness, and technical assessments are actively underway to equip these products with sufficient computing and memory.

Along with Samsung’s core portfolio, we are proud to introduce technology trends and memory requirements in the three areas that are the most critical today: cloud, automotive and on-device AI for PCs, clients and mobile devices.

AI Innovations in the Cloud

Datacenters are core infrastructure for AI-related computational processing and seamless services. ChatGPT, Bard and Bing Chat are some well-known examples of services provided through datacenters. With billions of parameters and tons of data coming and going in a short period of time, bottlenecking is a primary concern and may prevent the GPU from being fully utilized, particularly when there is insufficient memory bandwidth and capacity.

When it comes down to it, the focus in running a datacenter that services such AI applications is total cost of ownership (TCO). The industry is continuously looking to reduce TCO, and demand for memory performance and capacity is becoming especially diverse. In order to address these needs, Samsung has developed an array of optimized solutions for cloud applications.

HBM3E Shinebolt:

HBM3E is currently at the center of attention as memory for AI. It utilizes Samsung’s 12-layer stacking technology to provide a bandwidth of up to 1,280GBps and a high capacity of up to 36GB. Compared to the previous generation, HBM3, HBM3E’s performance and capacity have been improved by over 50%, which is expected to fulfill the memory requirements of the Hyperscale AI era.
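As a rough sanity check on these figures, the sketch below derives the per-pin data rate and per-layer die density implied by the stated bandwidth and capacity. The 1,024-bit interface width is an assumption that is not stated in this article, and the function names are illustrative only.

```python
def per_pin_rate_gbps(bandwidth_gbytes_per_s: float, interface_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by a stack's total bandwidth."""
    return bandwidth_gbytes_per_s * 8 / interface_bits


def per_die_density_gbit(stack_capacity_gbytes: float, layers: int) -> float:
    """Density of each DRAM die (Gbit) if capacity is split evenly across layers."""
    return stack_capacity_gbytes * 8 / layers


# Figures quoted in the text above.
HBM3E_BANDWIDTH_GBYTES_PER_S = 1280
HBM3E_CAPACITY_GBYTES = 36
HBM3E_LAYERS = 12

# Assumption (not from the article): a 1,024-bit-wide interface per stack.
ASSUMED_INTERFACE_BITS = 1024

pin_rate = per_pin_rate_gbps(HBM3E_BANDWIDTH_GBYTES_PER_S, ASSUMED_INTERFACE_BITS)
die_density = per_die_density_gbit(HBM3E_CAPACITY_GBYTES, HBM3E_LAYERS)

print(f"Implied per-pin rate: {pin_rate} Gbps")
print(f"Implied die density:  {die_density} Gb per layer")
```

Under that assumption, the quoted maximums work out to 10 Gbps per pin and 24Gb per stacked die.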

32Gb DDR5 DRAM:

Last September, Samsung became the first in the industry to develop a high-capacity 32Gb DDR5 product based on 12nm-class process technology. Currently, we are providing major chipset companies and customers with samples. With this breakthrough, it is possible to implement high-capacity 128GB modules within the same package size, without using TSV[4]. When applying TSV, it supports modules of up to 1TB. In addition, compared to existing 16Gb-based 128GB modules, the 32Gb DDR5 has improved power consumption by about 40% and supports data transfer speeds of up to 7.2Gbps. Thanks to these enhancements, DDR5 32Gb is well-positioned to be a core solution for high-capacity servers used for generative AI.

MRDIMM:

Samsung has developed MRDIMM[5] that performs twice as well as existing RDIMM by doubling the data transmission channels. The product supports a speed of 8.8Gbps and is expected to be actively adopted for AI applications that require high-performance memory, such as HPC[6]. Samsung completed MRDIMM verification with major chipset companies in the second half of 2023 and will leverage 32Gb DDR5 to provide a lineup of MRDIMM products with differentiated strengths in performance, capacity and power consumption.
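To put the per-pin speeds quoted for DDR5 and MRDIMM in module terms, the following sketch converts a per-pin data rate into a theoretical peak module bandwidth. It assumes a 64-bit data path per module (ECC bits excluded), which is not stated in this article, and it ignores protocol overhead, so real sustained bandwidth will be lower.

```python
def peak_module_bandwidth_gbytes_per_s(pin_rate_gbps: float, data_bits: int = 64) -> float:
    """Theoretical peak bandwidth (GB/s) of a module.

    data_bits defaults to 64, an assumed data-path width (ECC excluded).
    """
    return pin_rate_gbps * data_bits / 8


# Per-pin speeds quoted in the text above.
DDR5_32GB_PIN_RATE_GBPS = 7.2
MRDIMM_PIN_RATE_GBPS = 8.8

ddr5_peak = peak_module_bandwidth_gbytes_per_s(DDR5_32GB_PIN_RATE_GBPS)
mrdimm_peak = peak_module_bandwidth_gbytes_per_s(MRDIMM_PIN_RATE_GBPS)

print(f"32Gb DDR5 at 7.2Gbps: about {ddr5_peak:.1f} GB/s per module")
print(f"MRDIMM at 8.8Gbps:    about {mrdimm_peak:.1f} GB/s per module")
```

These are upper bounds for a single module; system-level bandwidth also depends on the number of memory channels the processor provides.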

PM9D3a:

PM9D3a is an SSD based on an 8-channel controller that supports PCIe 5.0. Compared to its predecessor, its sequential read speed has been improved by up to 2.3 times, and its power efficiency, improved by 60%, is an industry best. Last year, Samsung developed products with 7.68TB and 15.36TB capacities in the 2.5-inch form factor. In the first half of this year, Samsung will present a variety of lineups and form factors that range from low-capacity drives below 3.84TB to those up to 30.72TB. These products will be used in various new server systems and enable high-performance storage experiences.


High-Performance, Low-Power Solutions for On-Device AI

To implement certain AI services on the device level, multiple AI models must be saved and processed on the device itself. To achieve this, AI models saved in storage need to be loaded to memory in less than a second. The larger the AI model size, the more accurate the results. This means that to deliver seamless on-device AI services, the device itself also must have high-performance, high-capacity memory.
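The sub-second loading requirement can be turned into a storage throughput target. The sketch below is a back-of-the-envelope estimate only: the model size (3 billion parameters at 1 byte each) is a hypothetical example that does not come from this article, and the calculation ignores file-system, decompression and initialization overhead.

```python
def model_size_gbytes(params_billion: float, bytes_per_param: float) -> float:
    """Approximate model footprint in GB (1 GB = 10**9 bytes)."""
    return params_billion * bytes_per_param


def required_read_throughput_gbytes_per_s(size_gbytes: float, time_budget_s: float = 1.0) -> float:
    """Minimum sustained read throughput needed to load the model within the budget."""
    if time_budget_s <= 0:
        raise ValueError("time_budget_s must be positive")
    return size_gbytes / time_budget_s


# Hypothetical example values, chosen only for illustration.
EXAMPLE_PARAMS_BILLION = 3.0
EXAMPLE_BYTES_PER_PARAM = 1.0  # e.g. 8-bit quantized weights

size = model_size_gbytes(EXAMPLE_PARAMS_BILLION, EXAMPLE_BYTES_PER_PARAM)
needed = required_read_throughput_gbytes_per_s(size, time_budget_s=1.0)

print(f"Model footprint: {size} GB")
print(f"Required sustained read throughput for a 1 s load: {needed} GB/s")
```

With a one-second budget, the required throughput in GB/s is numerically equal to the model size in GB, which is why larger models push both storage speed and memory capacity requirements upward.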

LPDDR5X DRAM:

Samsung is developing an LPDDR5X product based on the LPDDR[7] standard that achieves data transfer speeds of up to 9.6Gbps. Using High-K/Metal Gate (HKMG) technology[8], power efficiency has been improved by 30% compared to the previous generation. It also provides standard packaging solutions to optimize the portfolio for each application.

LPDDR5X CAMM2:

Last September, Samsung developed an industry-first 7.5Gbps LPDDR5X CAMM2[9] based on LPDDR DRAM and is currently providing key partners with samples while undergoing verification. This product is expected to be a game changer in the PC and laptop DRAM market, and Samsung is in discussions with major customers to expand applications to AI, HPC, servers, datacenters and more.

LLW DRAM:

Low Latency Wide I/O (LLW) DRAM is a solution optimized not only for low latency, but also for ultra-high bandwidth of 128GB/s. It operates at a remarkably low energy cost of 1.2pJ/b[10], and since on-device AI requires AI models to respond instantly, it is an ideal solution for operating AI models on the device level.
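The two figures above can be combined into an approximate power number: energy per bit multiplied by bits transferred per second. This sketch assumes the 1.2pJ/b figure applies to every bit moved and that the interface runs at its full 128GB/s, with 1 GB taken as 10^9 bytes. Those are simplifying assumptions, not statements from this article.

```python
def transfer_power_watts(bandwidth_gbytes_per_s: float, energy_pj_per_bit: float) -> float:
    """Power (W) drawn when moving data at the given rate and energy per bit."""
    bits_per_second = bandwidth_gbytes_per_s * 1e9 * 8   # GB/s -> bit/s
    joules_per_bit = energy_pj_per_bit * 1e-12           # pJ -> J
    return bits_per_second * joules_per_bit


# Figures quoted in the text above.
LLW_BANDWIDTH_GBYTES_PER_S = 128
LLW_ENERGY_PJ_PER_BIT = 1.2

power = transfer_power_watts(LLW_BANDWIDTH_GBYTES_PER_S, LLW_ENERGY_PJ_PER_BIT)
print(f"Approximate power at full bandwidth: {power:.2f} W")
```

Under these assumptions, sustaining the full 128GB/s costs a little over 1.2W, which illustrates why energy per bit matters for battery-powered devices.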

PM9E1:

Set to be developed this June, PM9E1 is an SSD for PC/client OEMs that supports PCIe 5.0 and is based on an eight-channel controller. Compared to its predecessor, its sequential read speed is twice as fast and it comes with a 33% improvement in power efficiency. It is expected to be a key product for on-device AI, as it can transfer large language models (LLMs) to DRAM in less than one second.


Detachable AutoSSD Is Poised To Lead the Automotive Memory Market by 2025

With autonomous driving becoming more advanced, the structure of vehicle systems is changing from a distributed structure with multiple vehicle ECUs[11] to a centralized structure in which each area has integrated control functions. As a result, there is a growing need not only for high performance and high capacity, but also for shared SSDs that can pool data across multiple systems-on-chip (SoCs).

With the goal of becoming the top automotive memory solutions provider by 2025, Samsung has been introducing a variety of new products and technologies for automotive applications.

Detachable AutoSSD:

This is the world’s first detachable SSD for automotive applications and a product that can be used by multiple SoCs through partitioning and storage virtualization. Compared to the existing product, capacity has quadrupled from 1TB to 4TB, and random write speed has improved approximately fourfold from 240K IOPS to 940K IOPS, all in a detachable E1.S form factor. Samsung is currently holding discussions on detailed specifications with major automobile manufacturers in Europe and tier 1 customers, and the company plans to complete proof of concept (PoC) technical verification within the first quarter of this year.


Laying the Groundwork to Leap Into the Future

Last December, Samsung formed the Memory Product Planning Office to respond with greater agility to rapidly changing technologies and market environments. It is now accelerating its efforts to prepare for the future.

Pioneering the Future of Memory

The Product Planning Office will act as an “Expert Organization of Business Coordinators” that unifies multiple departments to cover the production process from start to finish and closely cater to customer needs.

All business functions like sensing, trend analysis, product planning, standardization, commercialization and technical support are now under the same roof. With these functions in one place, Samsung is better positioned to solidify its technology leadership in the memory market and serve as an internal and external control tower by:

- Analyzing technology trends in order to plan products with distinctive competitiveness,

- Taking rapid changes into consideration when managing product development, and

- Actively responding to individualized customer needs.

In addition, based on our mid- to long-term roadmap, we plan to focus even more on our new leap forward into the future. This includes working alongside our R&D teams to accelerate development of fundamental technologies.


Future Solutions and New Businesses for Customer Needs

The explosive growth of AI requires radical advances in memory. High-bandwidth, low-power memory and interface technologies such as DDR6, HBM4, GDDR7, PCIe 6.0, LPDDR6 and UFS 5.0[12] are necessary since they can support higher-performance systems. The market will also require new interfaces and stacking technologies such as CMM, a CXL-based memory, and advanced packages. Samsung will continue to lead the way in changing the memory paradigm by discovering new solutions, such as custom HBM and computational memory, as well as new businesses.

Custom HBM DRAM: The Key to Innovation in Memory Technology

With the growth of AI platforms, customers’ specific needs regarding HBM — such as capacity, performance and specialized functions — are also on the rise. To respond to these demands, Samsung is working closely with major datacenter customers and CPU/GPU leaders. The custom HBM DRAM is the key to innovation in memory technology and will serve as a breakthrough to overcome the technological limitations of memory semiconductors in the future.

Starting with the next-generation HBM4, Samsung plans to utilize advanced logic processes for buffer dies to respond to customers’ individualized needs. Between Memory, Foundry and System LSI, Samsung holds a unique advantage in terms of its comprehensive capabilities. These capabilities, along with its next-generation DRAM process and cutting-edge packaging technology, are the foundation for providing optimal solutions in line with new market changes in the future.

CMM: Leading the AI Era

CMM-D[13] can expand bandwidth and capacity alongside existing mainstream DRAM, making it an attractive option in next-generation computing markets that require high-speed data processing. This includes fields like artificial intelligence and machine learning.

Samsung leads the industry, having developed the world’s first CMM-D technology in May 2021, followed by the industry’s highest-capacity 512GB CMM-D and CMM-D 2.0. As the only company that can currently supply samples of 256GB CMM-D, it is also collaborating closely with various partners to build a robust CXL memory ecosystem.

PIM: A New Memory Paradigm

PIM[14] is a next-generation convergence technology that adds processor functions to memory so that computational tasks can take place within the memory itself. It can improve energy efficiency for AI accelerator systems by reducing data transfer between the CPU and memory.

In 2021, Samsung introduced the world’s first HBM-PIM. Developed in collaboration with major chipset companies, PIM has approximately doubled performance and reduced energy consumption by around 50% on average, compared to the existing GPU accelerator. To expand PIM applications, Samsung is speeding up the commercialization of HBM-PIM for AI accelerators and LPDDR-PIM for on-device AI.

PBSSD as a Service: A High-Capacity SSD Subscription Service That Goes Beyond Capacity Limits

“PBSSD as a Service” is a business model in which customers use services instead of purchasing a server configured with SSDs. As a high-capacity SSD subscription service, it is expected to contribute to lowering the initial investment cost of customers’ storage infrastructure, as well as maintenance costs, by providing customers with a petabyte-scale box that functions as memory expansion.


Fostering Solid Global Partnerships with Customers

Memory is facing another technological inflection point, as the growth potential of semiconductors in the AI era is enormous. In order to develop new products and markets, it is essential to build and maintain strong cooperation with partners and customers around the world.

Samsung Memory Research Center: Infrastructure for Customer Collaboration

The Samsung Memory Research Center (SMRC) is a platform that provides infrastructure for customers equipped with Samsung’s memory products to analyze the optimal combination of hardware and software of their servers and evaluate performance. The platform enables Samsung to facilitate differentiated cooperation with customers and prepare for the next generation of memory.

Last year — in an industry first — Samsung partnered with VMware to implement virtualization systems, verifying that PM1743, a PCIe Gen5 SSD, delivers optimal performance in VMware solutions. The company has solidified additional partnerships with software vendors through SMRC, including completing verification of CXL memory operations in Red Hat’s latest operating system for servers.

TECx: Near-Site Technical Support

Samsung has been operating its Technology Enabling Center (TEC) in key locations to provide seamless technical service and collaborate closely with global customers. The company has gradually been expanding service regions and established a TEC extension (TECx) in Taiwan last year.

Internally, TECx supports system certification by verifying new products and technologies such as DDR5, LPDDR5X, CMM and Gen5 SSDs at the forefront. Externally, it enhances customer relationship management by enabling immediate local technical support, and we expect it to continue to lead closer technological collaboration with customers.


It’s 2024, and the semiconductor market is changing faster than anyone could imagine. As a leader in future memory technology, Samsung will continue to take bold challenges head on. With our relentless innovation and strong partnerships, we are steadfast in our commitment to optimizing the AI era for all.

[1] Double Data Rate 5 (DDR5) is a random-access memory (RAM) specification.

[2] High Bandwidth Memory (HBM) is a high-performance RAM interface for 3D-stacked synchronous dynamic RAM (SDRAM).

[3] The Compute Express Link™ (CXL™) memory module (CMM) base standard is designed to help simplify system design and minimize industry confusion for first adoption.

[4] Through-silicon via (TSV) is a vertical electrical connection that passes completely through a silicon wafer or die.

[5] MRDIMM (Multiplexed Rank DIMM) combines two DDR5 DIMMs on a single module to effectively double the bandwidth.

[6] High-performance computing (HPC) is the ability to process data and perform complex calculations at high speeds.

[7] Low-Power DDR (LPDDR) consumes less power and is typically used in mobile applications.

[8] Dielectric is an insulating substance applied to microprocessor gates to prevent leakage and is traditionally made of silicon dioxide. However, a hafnium-based dielectric layer can be combined with a gate electrode composed of alternative metal materials. The result is a high dielectric constant, otherwise known as a high-K.

[9] CAMM refers to Compression-Attached Memory Module, and CAMM2 is a JEDEC-published common standard.

[10] pJ/b refers to picojoules consumed per bit.

[11] An electronic control unit (ECU) is a device that controls one or several electrical systems in a vehicle.

[12] Universal Flash Storage (UFS) is a flash storage specification typically used in mobile applications and consumer devices.

[13] CXL Memory Module-DRAM.

[14] Processing-in-memory (PIM) is an approach that combines processor and RAM on a single chip for enhanced computation.